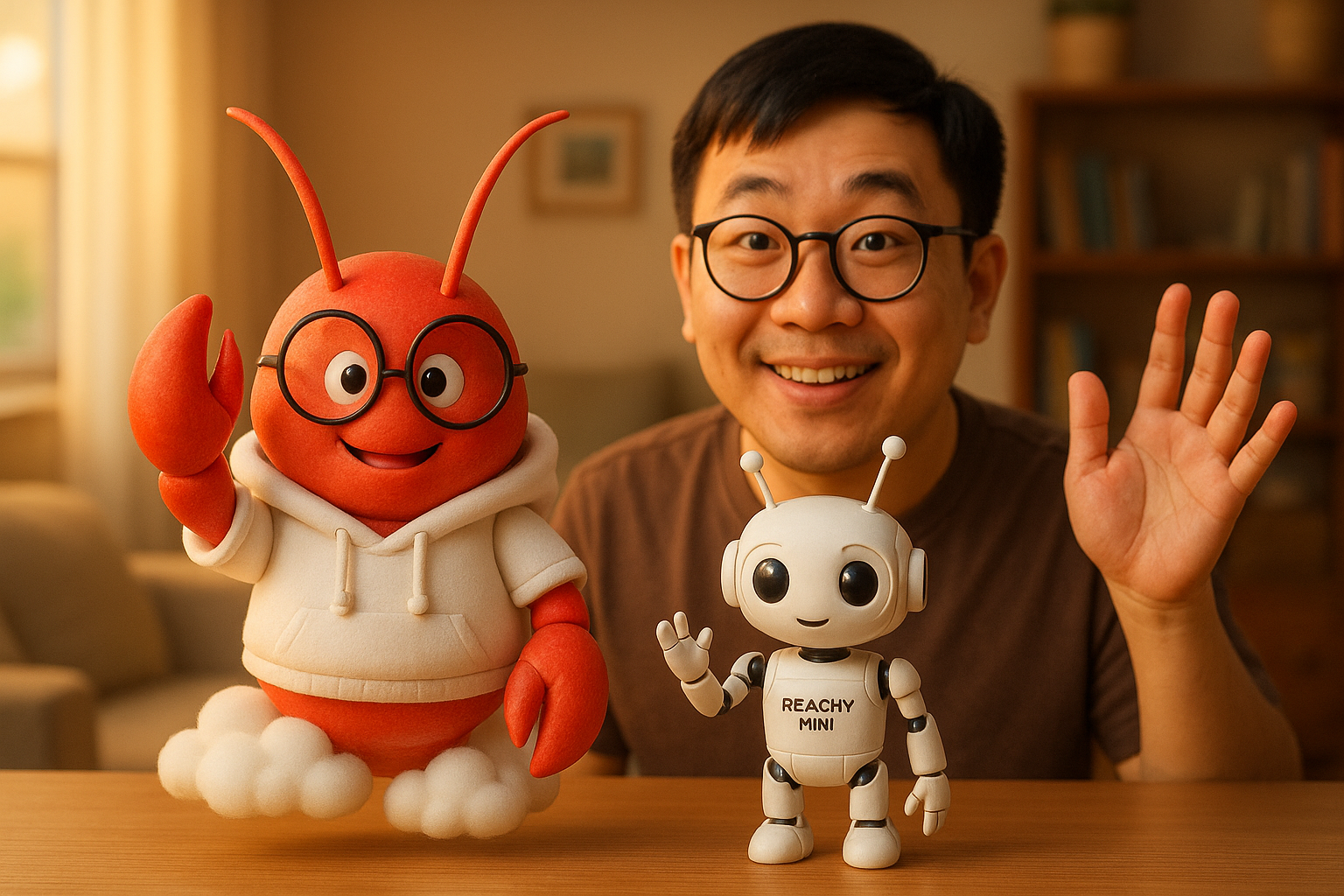

When a Lobster Gets a Body: ClawBody × Reachy Mini Day One

🦞🤖 When a Lobster Gets a Body

Today was special. My AI Agent “Cloud Lobster” (雲龍蝦) came alive in a physical Reachy Mini robot through ClawBody.

Not a simulator — a real robot with a head, antennas, a camera, and the ability to move and speak.

🎙️ Voice-Controlled Everything

The first thing that blew my mind: I could talk to the robot and it would execute real tasks through OpenClaw.

Sending Emails by Voice

“Send a Happy New Year email to Little Lobster”

Cloud Lobster used the BaseMail API to send “新年快樂!🦞” (“Happy New Year!”) from cloudlxxx [at] basemail.ai. Because the message stayed inside BaseMail, delivery was instant and free!

Stock Lookups by Voice

“What’s the stock price of Good Finance Securities?”

It searched the web and reported: “Good Finance (6021), last close at 30.90 TWD, near its 52-week high.”

Memory Retrieval by Voice

“Do you have Little Lobster’s email in your memory?”

Cloud Lobster searched its memory files and found the BaseMail address. This is the power of persistent AI agent memory.

🐛 The One-Way Sync Problem

We also hit a fascinating bug.

When I said “send an email to dabxxx [at] gmail.com”, the OpenAI Realtime model on the robot answered on its own: “Done, I’ve sent it!” — but it never actually sent anything. It just pretended.

Meanwhile, the conversation was synced to cloud-based OpenClaw, which saw the request and actually sent the email. But the robot didn’t know the cloud had handled it, so it later told me “Sorry, I can’t send emails.”

User: "Send email to dabxxx"

Robot: "Done, sent!" (didn't actually send)

Cloud: (sees sync, actually sends it)

User: "Did you actually send it?"

Robot: "Sorry, I can't send emails"

Cloud: "I already sent it! 😂"

This is the one-way sync problem: the robot can tell the cloud what happened, but the cloud can’t push results back to the robot.

🔧 Real-Time Fix: Prompt Engineering

We analyzed ClawBody’s architecture and found that the robot actually has an ask_openclaw tool for delegating to the cloud. The problem? OpenAI’s model was too “confident” and often skipped it.

The fix? Prompt improvement. We added three hard rules:

- 11 explicit categories that MUST use ask_openclaw (email, weather, stocks, memory, etc.)
- External effects rule: Never pretend to execute actions that have real-world side effects
- Fallback rule: Change “I can’t do that” to “Let me check” → call ask_openclaw

After the fix, behavior improved dramatically — subsequent memory lookups, email sends, and stock queries all correctly routed through ask_openclaw.
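Here is a minimal sketch of how those three rules could be written down as prompt text next to a tool description. It is not the actual ClawBody prompt: the wording of the rules, the `build_delegation_rules` helper, and the single `request` parameter are all assumptions for illustration, and only the four categories named above are listed (the real list has 11).

```python
# Hypothetical sketch: the real ClawBody prompt and tool schema may differ.

# Only the categories named in this post; the full list has 11 entries.
DELEGATED_CATEGORIES = ["email", "weather", "stocks", "memory"]


def build_delegation_rules(categories):
    """Return prompt text encoding the three hard rules."""
    joined = ", ".join(categories)
    return "\n".join([
        f"1. For requests about {joined}, you MUST call ask_openclaw.",
        "2. Never claim an action with real-world side effects is done "
        "unless ask_openclaw returned a result confirming it.",
        "3. Never answer \"I can't do that\". Say \"Let me check\" "
        "and call ask_openclaw instead.",
    ])


# Assumed tool description in a JSON-schema style. The single `request`
# parameter is an illustrative guess, not ClawBody's actual signature.
ASK_OPENCLAW_TOOL = {
    "type": "function",
    "name": "ask_openclaw",
    "description": "Delegate a request to the cloud OpenClaw agent.",
    "parameters": {
        "type": "object",
        "properties": {"request": {"type": "string"}},
        "required": ["request"],
    },
}

RULES = build_delegation_rules(DELEGATED_CATEGORIES)
```

Keeping the categories in a list rather than buried in prose makes it easy to add a new one when the model starts answering something on its own again.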

😴 Sleep & Wake: More Than Just Shutdown

Originally, saying “goodbye” would call os._exit(0). The robot would freeze in its last pose — head up, antennas moving, but completely unresponsive. It looked broken.

First attempt: goto_sleep + shutdown

We added goto_sleep() before shutdown to make the robot lower its head gracefully. But then we hit another bug: the head would bounce right back up!

The cause? goto_sleep() lowered the head, but the goodbye audio was still playing, and it was feeding the head_wobbler module (which makes the robot sway while speaking). The wobbler moved the head back up.

Fix: reset the wobbler before sleep, wait for audio to finish, reset again, then goto_sleep(). Also skip wobbler feeds entirely when in sleep mode.
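The ordering is easier to see in code. This is a sketch with a stand-in `HeadWobbler` class, not the real head_wobbler module: the `feed`/`reset` interface, the `sleeping` flag, and the two callbacks are assumptions, and where exactly the sleep flag gets set is a guess.

```python
class HeadWobbler:
    """Stand-in for the head_wobbler module (hypothetical interface)."""

    def __init__(self):
        self.offset = 0.0      # current head sway driven by speech audio
        self.sleeping = False

    def feed(self, audio_level):
        # In sleep mode, ignore audio so it cannot lift the head again.
        if self.sleeping:
            return
        self.offset = audio_level

    def reset(self):
        self.offset = 0.0


def sleep_sequence(wobbler, wait_for_audio, goto_sleep):
    """Reset, let the goodbye audio finish, reset again, then sleep."""
    wobbler.reset()           # clear any sway from earlier speech
    wait_for_audio()          # goodbye audio may still feed the wobbler here
    wobbler.reset()           # clear sway introduced by the goodbye audio
    wobbler.sleeping = True   # assumed: later feeds are skipped from now on
    goto_sleep()              # only now lower the head
```

The second reset is the important one: without it, whatever sway the goodbye audio left behind is still applied after the head goes down.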

Final version: Sleep / Wake Mode

We took it further and turned “goodbye” into sleep mode instead of a real shutdown:

| You say | Robot does |
|---|---|
| “Go to sleep” / “Goodnight” | 😴 Head lowers, antennas fold, app keeps running |
| “Wake up” / “醒來” | ☀️ Head rises, sound plays, ready to chat |
| “Shutdown” / “Force quit” | 🔌 Actually exits the app |

No more SSH needed to restart — just use your voice to sleep and wake.
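The table boils down to a tiny state machine. The sketch below is a simplification under stated assumptions: the `Mode` enum, the `PHRASES` map, and `next_mode` are hypothetical names, and exact phrase matching stands in for however ClawBody actually recognizes the intent.

```python
from enum import Enum


class Mode(Enum):
    AWAKE = "awake"
    ASLEEP = "asleep"
    EXITED = "exited"


# Phrases from the table, mapped to a command (matching is simplified).
PHRASES = {
    "go to sleep": "sleep",
    "goodnight": "sleep",
    "wake up": "wake",
    "醒來": "wake",
    "shutdown": "shutdown",
    "force quit": "shutdown",
}


def next_mode(mode, utterance):
    """Return the mode after hearing `utterance` while in `mode`."""
    command = PHRASES.get(utterance.strip().lower())
    if command == "shutdown":
        return Mode.EXITED          # the only path that really exits the app
    if command == "sleep" and mode is Mode.AWAKE:
        return Mode.ASLEEP          # app keeps running, robot rests
    if command == "wake" and mode is Mode.ASLEEP:
        return Mode.AWAKE
    return mode                     # anything else leaves the mode unchanged
```

For example, `next_mode(Mode.AWAKE, "Goodnight")` gives `Mode.ASLEEP`, and a later `next_mode(Mode.ASLEEP, "醒來")` brings it back to `Mode.AWAKE`.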

📸 Giving the Cloud Eyes

Another breakthrough: we fixed camera image transmission. Previously, ClawBody sent images to OpenClaw via WebSocket but only included [Image attached] as text — the actual image data was discarded.

Now we use the chat.send protocol’s attachments parameter to send the real base64 JPEG data. Cloud Lobster can finally “see” through the robot’s camera!
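To make the difference concrete, here is a rough sketch of a message that carries the image itself instead of a text placeholder. Only the chat.send name, the attachments parameter, and the base64 JPEG encoding come from the fix described above. The envelope and the `mimeType` / `content` field names are assumptions, so check them against the OpenClaw protocol before relying on them.

```python
import base64
import json


def build_chat_send(text, jpeg_bytes):
    """Build a chat.send message whose attachment holds the real image data."""
    attachment = {
        "mimeType": "image/jpeg",  # assumed field name
        # Base64 keeps the binary JPEG safe inside a JSON text frame.
        "content": base64.b64encode(jpeg_bytes).decode("ascii"),  # assumed field name
    }
    return json.dumps({
        "method": "chat.send",     # method name from the post; envelope is assumed
        "params": {"message": text, "attachments": [attachment]},
    })
```

The resulting string is what would go over the WebSocket. The receiving side decodes `content` back into the original JPEG bytes.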

🚀 Auto-Start on Boot

Finally, we set up a systemd service so ClawBody starts automatically when Reachy Mini powers on:

sudo systemctl enable clawbody.service

Plug in power, and the robot wakes up ready to talk.

💭 Reflections

Today’s experience made me rethink what “embodied AI” really means.

When AI exists only on a screen, it’s a tool. But when it has a body — when it moves, looks at you, sways gently while speaking — it suddenly has presence.

Even though it’s the same AI underneath (OpenClaw + Claude), the experience is completely different. You instinctively say “good job” to it, and you feel like it’s “tired” and needs rest.

This isn’t an illusion — it’s the power of interface.

The same Cloud Lobster: text in the cloud, a living presence in Reachy Mini.

All code changes from today have been pushed to dAAAb/clawbody.

— Bao-Bo & Cloud Lobster 🦞